Responsible AI with Databricks means scaling innovation without losing control. Today’s leaders need speed, but within rules that protect data, models, and people. This blog explains how Databricks helps organizations balance fast delivery with strict compliance requirements, using platform-level controls designed for the public sector and regulated industries.

TL;DR: Responsible AI Starts with Governed Data on Databricks

Responsible AI depends on governed, high-quality data. The Databricks Lakehouse, paired with Unity Catalog, lineage tracking, audit logs, and the MLflow Model Registry, provides an auditable foundation for AI. These tools support the governance, transparency, and control practices described in the NIST AI RMF and ISO/IEC 42001, which makes Databricks a practical fit for state, local, and education (SLED) organizations and other regulated sectors. Optimum helps clients operationalize this platform to build AI that regulators and stakeholders can trust.

What Does “Responsible AI” Mean in Practice?

Responsible AI means building systems that are lawful, fair, explainable, and auditable across every step from planning to monitoring. It aligns with frameworks like NIST’s AI Risk Management Framework and ISO/IEC 42001, both of which focus on governance, transparency, and control.

Public-sector and regulated teams need more than good intentions; they need proof. That means tracking decisions, tests, and approvals. A solid responsible AI framework builds trust by producing evidence of control, which auditors and oversight bodies now expect.

How Does Data Quality Impact AI Compliance?

Bad data leads to biased models, unsafe decisions, and audit failures. High-quality inputs, monitored and verified at every stage, are crucial for meeting compliance standards and ensuring the trustworthiness of AI outputs.

In Databricks, you can define expectations for schema, ranges, null values, and anomalies. Built-in alerts detect drift, and quarantine flows hold bad data for review. Ownership matters too: assign clear stewards to manage the checks. Strong data quality, combined with solid data governance, helps prevent problems before they reach production.
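
The expectation-and-quarantine pattern described above can be sketched in plain Python. This is only an illustration of the logic, not Databricks code: the `Expectation` class, the rule names, and the sample claims rows are all hypothetical, and on the platform the same rules would be declared in the pipeline itself.

```python
from dataclasses import dataclass
from typing import Any, Callable

Row = dict[str, Any]


@dataclass(frozen=True)
class Expectation:
    """A named rule that a row either satisfies or violates."""
    name: str
    check: Callable[[Row], bool]


# Hypothetical rules for an illustrative claims feed.
EXPECTATIONS = [
    # Schema: every required column must be present.
    Expectation("has_required_columns",
                lambda r: {"claim_id", "amount", "state"} <= r.keys()),
    # Nulls: the key column must be populated.
    Expectation("claim_id_not_null",
                lambda r: r.get("claim_id") is not None),
    # Ranges: the amount must be numeric and within agreed bounds.
    Expectation("amount_in_range",
                lambda r: isinstance(r.get("amount"), (int, float))
                and 0 <= r["amount"] <= 1_000_000),
]


def split_rows(rows, expectations):
    """Return (passed, quarantined); quarantined rows keep their failure reasons."""
    passed, quarantined = [], []
    for row in rows:
        failures = [e.name for e in expectations if not e.check(row)]
        if failures:
            quarantined.append({"row": row, "failed": failures})
        else:
            passed.append(row)
    return passed, quarantined


rows = [
    {"claim_id": "C-1", "amount": 250.0, "state": "TX"},
    {"claim_id": None, "amount": 90.0, "state": "TX"},
    {"claim_id": "C-3", "amount": -5, "state": "TX"},
]
passed, quarantined = split_rows(rows, EXPECTATIONS)
```

Keeping the failure reasons next to each quarantined row gives the data steward something concrete to review, and gives an auditor evidence that the check actually ran.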

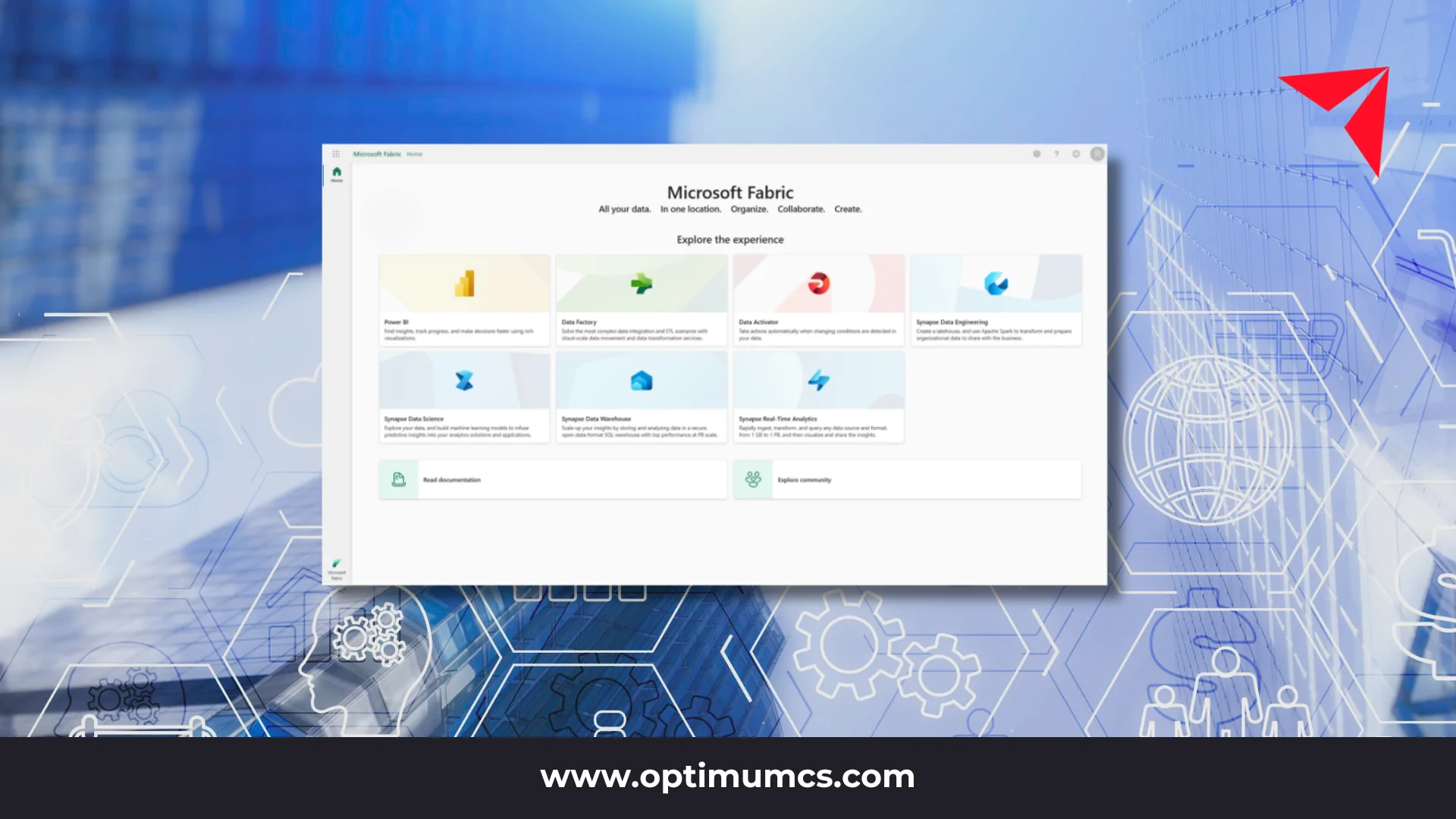

Why Is Databricks Ideal for Responsible AI Development?

Databricks unifies data and AI with governance at its core. Unity Catalog manages access and tracks data lineage. MLflow Model Registry adds stage gates for models. System and account audit logs keep a record of activity. Together, they support compliance work on Databricks and help enforce enterprise AI governance.

This setup enables structured model promotion, from dev to staging to production, with required tags and approvals. Lineage tools link datasets to features to models. Logs answer key questions: who made a change, what was touched, when, and why. It’s control built into every step.
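
As a rough sketch of what a promotion gate checks, the snippet below models the dev, staging, and production path in plain Python. It does not call the MLflow API. The `ModelVersion` class, the required tag names, and the rule that only production needs a recorded approval are assumptions made for illustration, and how stages are represented depends on the registry version in use.

```python
from dataclasses import dataclass, field

STAGES = ["dev", "staging", "production"]

# Hypothetical tag names; a real policy would define its own.
REQUIRED_TAGS = {"owner", "data_classification", "validation_run_id"}


@dataclass
class ModelVersion:
    name: str
    version: int
    stage: str = "dev"
    tags: dict = field(default_factory=dict)
    approvals: list = field(default_factory=list)


def promotion_blockers(model):
    """List the reasons this model version cannot move to the next stage."""
    if model.stage == STAGES[-1]:
        return ["already in production"]
    blockers = []
    missing = sorted(REQUIRED_TAGS - set(model.tags))
    if missing:
        blockers.append("missing tags: " + ", ".join(missing))
    next_stage = STAGES[STAGES.index(model.stage) + 1]
    # Assumed policy: production requires at least one recorded approval.
    if next_stage == "production" and not model.approvals:
        blockers.append("no recorded approval for production")
    return blockers


def promote(model):
    """Move the model one stage forward, or raise if the gate is closed."""
    blockers = promotion_blockers(model)
    if blockers:
        raise PermissionError("; ".join(blockers))
    model.stage = STAGES[STAGES.index(model.stage) + 1]
    return model.stage


model = ModelVersion(
    "eligibility_scoring", 3,
    tags={"owner": "data-science",
          "data_classification": "restricted",
          "validation_run_id": "run-0001"},
)
promote(model)                                  # dev -> staging
blockers_before_approval = promotion_blockers(model)
model.approvals.append("compliance-lead")       # approval is recorded first
promote(model)                                  # staging -> production
```

Returning the blockers as a list, rather than a single yes or no, means a rejected promotion explains itself in the log.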

Checklist: Key Compliance Features to Enable in Databricks

- Lineage maps for datasets, features, and models

- Role- and attribute-based access controls (RBAC/ABAC)

- Table- and column-level classification policies

- Expectation-based data quality checks

- Governed model registry with stage gates and approval tags

- Delta Sharing logs with full audit trails
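
To make the classification and access items in the checklist concrete, here is a minimal sketch of an attribute-based decision in plain Python. The column names, classification labels, and clearance ordering are hypothetical. On Databricks the policy would be enforced by Unity Catalog rather than by application code like this.

```python
# Hypothetical classification tags attached to columns.
COLUMN_TAGS = {
    "citizens.ssn": "restricted",
    "citizens.zip_code": "internal",
    "citizens.signup_date": "public",
}

# Assumed ordering of sensitivity levels, lowest to highest.
CLEARANCE = {"public": 0, "internal": 1, "restricted": 2}


def visible_columns(user_clearance, column_tags):
    """Return the columns whose classification is at or below the user's clearance."""
    level = CLEARANCE[user_clearance]
    return sorted(col for col, tag in column_tags.items()
                  if CLEARANCE[tag] <= level)


analyst_view = visible_columns("internal", COLUMN_TAGS)
```

The point of the pattern is that access follows the classification tag, so reclassifying a column changes who can see it without editing individual grants.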

How Can Organizations Ensure AI Transparency and Auditability?

To stay compliant, you need to maintain traceability across the entire stack. That means tracking inputs, features, models, and inferences. Versioning, approval logs, and event tracking all play a role in AI model transparency and AI risk management.

Databricks supports this with model cards, decision logs, and run IDs for reproducibility. The feature store links features back to source data. Central logging policies keep a history of access, approvals, and outputs. These tools help teams answer who approved what, when, and based on which data.
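
One way to picture "who approved what, when, and based on which data" is a decision record that resolves a model back to its source tables through lineage. The sketch below uses an in-memory lineage map; the asset names, run ID, approver, and timestamp are hypothetical placeholders, not output from a Databricks API.

```python
import json
from datetime import datetime, timezone

# Hypothetical lineage edges: each asset maps to the assets it was derived from.
LINEAGE = {
    "model:eligibility_scoring/3": [
        "feature:household_income_band",
        "feature:prior_claims_count",
    ],
    "feature:household_income_band": ["table:raw.income_filings"],
    "feature:prior_claims_count": ["table:raw.claims"],
}


def upstream_tables(asset, lineage):
    """Walk lineage edges and return the source tables the asset depends on."""
    found, seen, stack = set(), set(), [asset]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        parents = lineage.get(node, [])
        # A table with no recorded parents is treated as a source.
        if not parents and node.startswith("table:"):
            found.add(node)
        stack.extend(parents)
    return sorted(found)


def decision_record(model, run_id, approver, approved_at, lineage):
    """Build one audit entry linking an approval to a run and its source data."""
    return {
        "model": model,
        "run_id": run_id,
        "approved_by": approver,
        "approved_at": approved_at.isoformat(),
        "source_tables": upstream_tables(model, lineage),
    }


record = decision_record(
    "model:eligibility_scoring/3",
    "run-0001",
    "compliance-lead",
    datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
    LINEAGE,
)
print(json.dumps(record, indent=2))
```

Storing the run ID alongside the approval is what makes the decision reproducible: the same run can be reloaded and compared against the data listed in the record.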

What Are the Key Steps to Implementing a Compliant AI Strategy?

Start by mapping controls to frameworks like NIST AI RMF and ISO/IEC 42001. Then build those controls into Databricks workflows. Monitor outputs, test regularly, log activity, and document every step. These actions align your program with audit and AI regulatory compliance needs.

Success requires a cross-team effort. Security, data, legal, and compliance leads must work together. Prepare for new laws, including themes from the EU AI Act. Build audit-ready artifacts, such as lineage records, approval logs, and data quality checks. These enable faster oversight and reduce long-term risk.

6-Step Rollout Plan

- Map risks and controls to NIST AI RMF and ISO/IEC 42001.

- Centralize data and permissions with Unity Catalog.

- Add expectations and monitoring to all data pipelines.

- Register models, require tags and approvals, and set up stage gates.

- Turn on audit logs; define retention periods and alerts.

- Document every process, run mock audits, and fix gaps.
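
The first step, mapping controls to a framework, can start as a simple coverage check. The sketch below uses the four NIST AI RMF functions (Govern, Map, Measure, Manage). The assignment of each control to a function and the enabled flags are illustrative assumptions; a real mapping should come from your own risk assessment.

```python
NIST_AI_RMF_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")

# Illustrative mapping only; the function assigned to each control is an assumption.
CONTROLS = [
    {"control": "Unity Catalog permissions", "function": "Govern", "enabled": True},
    {"control": "Pipeline expectations and monitoring", "function": "Measure", "enabled": True},
    {"control": "Model registry approvals", "function": "Manage", "enabled": False},
    {"control": "Audit log retention and alerts", "function": "Govern", "enabled": True},
]


def coverage_gaps(controls, functions):
    """Return the framework functions with no enabled control mapped to them."""
    covered = {c["function"] for c in controls if c["enabled"]}
    return [f for f in functions if f not in covered]


gaps = coverage_gaps(CONTROLS, NIST_AI_RMF_FUNCTIONS)
print("Functions without an enabled control:", gaps)
```

A gap list like this is a useful input to the mock audits in the last step, because it shows where evidence is missing before an auditor asks for it.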

Traditional AI vs. Responsible AI Architecture (Quick Comparison Table)

| Area | Traditional AI | Responsible AI with Databricks |
| --- | --- | --- |
| Data | Siloed and fragmented | Governed with full lineage |
| Quality | Ad-hoc checks | Enforced expectations and monitoring |
| Models | Manual promotion | Registry gates with approvals |
| Logs | Scattered and limited | Unified, queryable system logs |
| Compliance | Reactive fixes | Built-in, audit-ready by design |

FAQs: Answers for Risk-Conscious Teams

How does Databricks help maintain AI compliance?

It enforces governance with lineage tracking, audit logs, and model registry gates. Approval logs, for example, capture who promoted a model to production and when.

What are the risks of scaling AI without governance?

You risk bias, data leakage, opaque decisions, and regulatory penalties. One common issue: unapproved models going live with outdated or biased inputs.

How can we improve data quality for responsible AI?

Set clear expectations, monitor for drift, use quarantine flows, and assign data stewards. Drift alerts help catch broken pipelines before they impact outcomes.

Which regulations affect public-sector AI?

The NIST AI RMF and ISO/IEC 42001 are common reference frameworks, alongside applicable privacy laws and themes from the EU AI Act. Staying audit-ready means logging actions, documenting approvals, and testing processes.

Is responsible AI a drag on innovation?

No. Governance accelerates the delivery of safe and repeatable models. Teams work faster when every step is documented and trusted.

Book a Databricks Governance Assessment with Optimum

Regulated teams don’t need more tools. They need control. Contact Optimum to schedule a Databricks governance assessment. See how your current setup compares and get clear next steps to build responsible AI with Databricks that’s secure, auditable, and ready for real-world oversight.

About Optimum

Optimum is an award-winning IT consulting firm that provides AI-powered data and software solutions, tailored to mid-market and large enterprises.

With our deep industry expertise and extensive experience in data management, business intelligence, AI and ML, and software solutions, we empower clients to enhance efficiency and productivity, improve visibility and decision-making processes, reduce operational and labor expenses, and ensure compliance.

From application development and system integration to data analytics, artificial intelligence, and cloud consulting, we are your one-stop shop for your software consulting needs.

Reach out today for a complimentary discovery session, and let’s explore the best solutions for your needs!

Contact us: info@optimumcs.com | 713.505.0300 | www.optimumcs.com